PhD Student Maryam Naghizadeh invited to present at CGI’19

PhD student Maryam Naghizadeh was invited to present her work with Prof. Cosker at CGI’19. Computer Graphics International, founded by the Computer Graphics Society (CGS), is one of the oldest annual international conferences in computer graphics and one of the most important worldwide.

Multi-character Motion Retargeting for Large-Scale Transformations

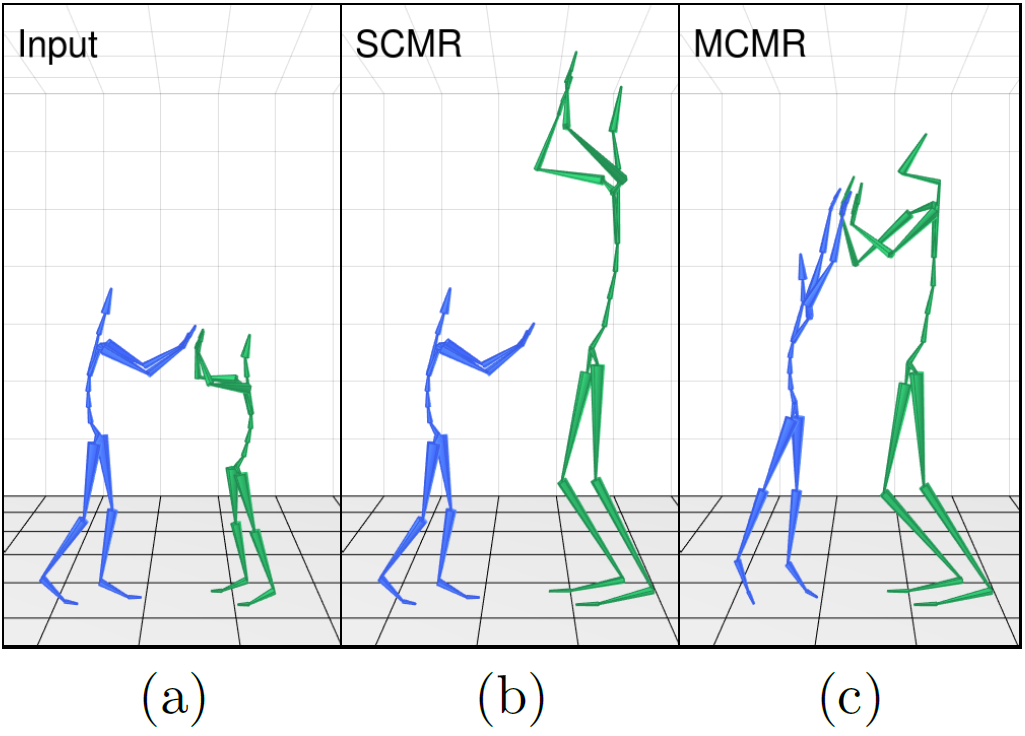

Single-character motion retargeting (SCMR) is “the problem of adapting an animated motion from one character to another” [1]. SCMR is a widely studied field with applications ranging from animation to robotics. However, many of these applications require several characters to be retargeted together, e.g. fighting characters in an animation or robots performing a collaborative task. SCMR falls short for such problems, because retargeting each character independently loses the interactions between them. We refer to these problems as multi-character motion retargeting (MCMR), which aims to generate motion for multiple target characters given the motion data of their corresponding source subjects. MCMR differs from retargeting several characters individually at the same time, as illustrated in Fig. 1: when the green character from the input is individually retargeted to 2.0x its original size via SCMR, the high-five between the blue and green characters is lost, whereas an MCMR solution maintains this interaction. MCMR is challenging because it requires retargeting each character’s motion correctly while preserving the nature of the interactions between them.

Fig 1. The green character from the input (a) is retargeted to 2.0x its original size via SCMR (b) and MCMR (c). The interaction between blue and green characters is lost in SCMR while MCMR successfully preserves the high-five.
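
To make the interaction loss concrete, here is a toy 2D sketch that is not taken from the paper. It models independent SCMR crudely as uniform scaling of one character about its root joint (a hypothetical simplification; real retargeting solvers do much more). Under that assumption, any point away from the root moves by (s - 1) times its offset from the root, so a pair of touching hands separates. The function name and coordinates are made up for illustration.

```python
import math


def scale_about_root(joints, root, s):
    """Uniformly scale joint positions about a root point: a crude, hypothetical
    stand-in for retargeting one character to a body s times larger."""
    return [tuple(r + s * (p - r) for p, r in zip(joint, root)) for joint in joints]


# Hypothetical 2D toy poses: the raised hands touch at (1.0, 2.0) during the high-five.
blue_hand = (1.0, 2.0)
green_root = (2.0, 0.0)
green_hand = (1.0, 2.0)

# Retarget only the green character, independently of the blue one, to 2.0x its size.
(green_hand_scaled,) = scale_about_root([green_hand], green_root, 2.0)

gap_before = math.dist(blue_hand, green_hand)
gap_after = math.dist(blue_hand, green_hand_scaled)
print(green_hand_scaled, gap_before, round(gap_after, 3))  # (0.0, 4.0) 0.0 2.236
```

With s = 2.0 the green hand moves from (1.0, 2.0) to (0.0, 4.0), about 2.24 units away from the blue hand, so the contact is gone. An MCMR method has to weigh each character's new proportions against such inter-character relationships instead of scaling each character in isolation.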

MCMR is even more difficult when the scale change of one character differs greatly from that of the other. Such cases are common in games and movies: for example, films such as Avatar and The Lord of the Rings use motion retargeting to drive characters that are much taller or shorter than the human actors controlling them. Current solutions include using physical props to direct actors’ eye-lines towards the correct target above the other actor’s body, or having actors stand on boxes or stilts. Even so, a significant amount of clean-up is required, which considerably increases post-processing time. Moreover, acting under such circumstances is quite inconvenient for the actors.

Method Overview:

In this paper, we propose an algorithm for large-scale MCMR that uses distance-based interaction meshes with anatomically-based stiffness weights. Existing MCMR solutions fail under large-scale character changes because they are tailored to cases where the height relationship between source and target characters stays similar. They cannot adapt to increasing scale changes mainly because they ignore human anatomy and assume equal freedom of movement for all joints along the skeleton. To assign different levels of motion freedom to skeletal joints, we use stiffness weights, which have been successfully employed in large mesh deformations [2]. The stiffness weights define how costly it is for the interaction mesh to deform around each joint. Intuitively, they act like joint angle constraints: when the mesh does not deform around a joint after retargeting, the angles between that joint and its neighbors do not change either. Our method successfully retargets motion for characters under large-scale transformations, in considerably less computation time than existing MCMR approaches. To summarize, our contributions include:

1- Proposing the first MCMR algorithm tailored for large-scale character transformations.
2- Introducing an interaction mesh structure suitable for small and large-scale character transformations.
3- Introducing anatomically-based joint stiffness weights that improve retargeting quality while significantly reducing retargeting time.
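
The role of stiffness weights can be illustrated with a minimal sketch, assuming uniform neighbor weights for the Laplacian coordinates and a diagonal stiffness matrix; the paper's exact weighting scheme may differ. Each joint's Laplacian coordinate is its offset from the centroid of its interaction-mesh neighbors, and the deformation energy penalizes changes in those offsets, scaled by a per-joint stiffness weight. The helper names and the toy triangle mesh are hypothetical.

```python
def laplacian_coords(positions, neighbors):
    """Uniform-weight Laplacian coordinate of each vertex: its position minus
    the centroid of its mesh neighbors."""
    coords = []
    for i, p in enumerate(positions):
        nbrs = neighbors[i]
        centroid = [sum(positions[j][k] for j in nbrs) / len(nbrs) for k in range(len(p))]
        coords.append([p[k] - centroid[k] for k in range(len(p))])
    return coords


def weighted_deformation_energy(src, tgt, neighbors, stiffness):
    """Sum over joints of stiffness[i] * ||delta_i(tgt) - delta_i(src)||^2,
    i.e. a diagonal stiffness matrix applied to Laplacian-coordinate changes."""
    d_src = laplacian_coords(src, neighbors)
    d_tgt = laplacian_coords(tgt, neighbors)
    return sum(
        w * sum((a - b) ** 2 for a, b in zip(ds, dt))
        for w, ds, dt in zip(stiffness, d_src, d_tgt)
    )


# Toy mesh: three joints, each connected to the other two (hypothetical).
neighbors = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
tgt = [(0.5, 0.0), (1.0, 0.0), (0.0, 1.0)]  # only joint 0 moves

uniform = weighted_deformation_energy(src, tgt, neighbors, [1.0, 1.0, 1.0])
stiff_joint0 = weighted_deformation_energy(src, tgt, neighbors, [10.0, 1.0, 1.0])
print(uniform, stiff_joint0)  # 0.375 2.625
```

The same displacement of joint 0 costs seven times as much when that joint is ten times stiffer (2.625 versus 0.375), so an optimizer minimizing this energy would prefer to let the mesh deform around less stiff joints. Translating the whole mesh leaves every Laplacian coordinate, and hence the energy, unchanged.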

Fig. 2. Method Overview

Figure 2 depicts an overview of our method. It takes as input a motion sequence containing motion data for multiple subjects. Each subject is associated with a target character, and the objective of the algorithm is to retarget the input motion sequence to the target characters such that the spatial relationships among the subjects are preserved. The algorithm consists of two stages: pre-processing and motion retargeting. During pre-processing, the algorithm computes the Euclidean distance between all joint pairs in each frame and stores these distances in a per-frame distance matrix. These matrices are then used to build an interaction mesh for each frame. In this paper, we introduce a new distance-based interaction mesh structure that improves retargeting quality by prioritizing local connections over global ones.
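
The pre-processing stage can be sketched as follows. The distance matrix follows the description above; the mesh construction is a swapped-in stand-in, since the exact connectivity rule is not spelled out in this summary: each joint is simply linked to its k nearest joints, which is one simple way to favor local connections over global ones. Function names, k and the toy coordinates are hypothetical.

```python
import math


def distance_matrix(joints):
    """Euclidean distance between every pair of joints in one frame."""
    n = len(joints)
    return [[math.dist(joints[i], joints[j]) for j in range(n)] for i in range(n)]


def knn_interaction_mesh(dist, k):
    """Hypothetical distance-based mesh: link each joint to its k nearest joints
    (from either subject), giving an undirected edge set biased towards local links."""
    n = len(dist)
    edges = set()
    for i in range(n):
        others = sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])
        for j in others[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges


# One toy frame with two subjects (coordinates are made up for illustration).
frame = [
    (0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.5, 1.8, 0.0),  # subject A: pelvis, chest, hand
    (0.6, 1.8, 0.0), (1.5, 1.1, 0.0), (1.6, 0.0, 0.0),  # subject B: hand, chest, pelvis
]
D = distance_matrix(frame)
mesh = knn_interaction_mesh(D, k=1)
print(sorted(mesh))  # [(0, 1), (1, 2), (2, 3), (4, 5)]
```

With k = 1, the only edge between the two subjects is the hand-to-hand edge (2, 3), i.e. the contact that retargeting should preserve; increasing k adds further, longer-range edges.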

During the motion retargeting stage, we follow an iterative approach similar to [3]. At each morph step, our algorithm solves a space-time optimization problem by minimizing an energy function with three components: hard constraints, acceleration energy and deformation energy. The hard constraints ensure that the source subjects’ bone lengths are properly scaled to those of their corresponding target characters. The acceleration energy encourages smooth transitions between frames. Finally, the deformation energy keeps the Laplacian deformation of the interaction meshes minimal. In our method, we introduce a new anatomically-based deformation term that includes a stiffness matrix. This matrix defines a stiffness weight for each joint, which allows some joints to adhere more or less strictly to the interaction mesh structure than others. These weights improve the results of our algorithm while reducing retargeting time significantly.
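
The acceleration energy and the bone-length hard constraints described above can be sketched for a single character as follows. This is an illustration under stated assumptions, not the paper's solver: acceleration is approximated by second-order finite differences over frames, each bone's target length is assumed to be its source length times a single per-character scale factor, and the functions only evaluate the terms rather than minimize them. All names are hypothetical.

```python
import math


def acceleration_energy(trajectory, dt=1.0):
    """Sum of squared finite-difference accelerations of one joint's trajectory,
    a_t = (p[t+1] - 2*p[t] + p[t-1]) / dt**2. Smooth motion yields low energy."""
    energy = 0.0
    for t in range(1, len(trajectory) - 1):
        prev, cur, nxt = trajectory[t - 1], trajectory[t], trajectory[t + 1]
        acc = [(n - 2 * c + p) / dt ** 2 for p, c, n in zip(prev, cur, nxt)]
        energy += sum(a * a for a in acc)
    return energy


def bone_length_residuals(joints, bones, source_lengths, scale):
    """Hard-constraint residuals: bone (i, j) should measure scale * source length.
    A constrained solver would drive all of these to zero."""
    return [math.dist(joints[i], joints[j]) - scale * length
            for (i, j), length in zip(bones, source_lengths)]


smooth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
jerky = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
print(acceleration_energy(smooth), acceleration_energy(jerky))  # 0.0 4.0

# Two-bone chain whose source bones are 1.0 long, retargeted to 2.0x scale.
pose = [(0.0, 0.0), (0.0, 2.0), (0.0, 3.0)]
print(bone_length_residuals(pose, [(0, 1), (1, 2)], [1.0, 1.0], 2.0))  # [0.0, -1.0]
```

A negative residual means the bone is still too short for the target character. In this sketch the constraints and the smoothness term are only evaluated; a full solver would enforce the former exactly while trading off the latter against the stiffness-weighted deformation term.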

Results:

Figure 3 shows our results for three sample frames from the high-five, dance and Ogoshi sequences. The first row (highlighted in yellow) displays the characters before retargeting. The second (highlighted in pink) and third (highlighted in red) rows show the results of retargeting the green subject to 2.0x its original size using the method in [3] (SRP-WOS) and our method (DB-WS), respectively. Doubling the green subject’s scale changes the height relationship between the subjects. SRP-WOS fails to adapt to this new height relationship and tries to maintain the original one by squashing the green character along the spine. In contrast, our method successfully adapts to the new scale of the green character by bending the knee joints when required and keeping the spine joints intact.

Fig. 3. Results

References:

[1] Gleicher, M., 1998, July. Retargetting motion to new characters. In Proceedings of the 25th annual conference on Computer graphics and interactive techniques (pp. 33-42). ACM.

[2] Müller, M. and Gross, M., 2004, May. Interactive virtual materials. In Proceedings of Graphics Interface 2004 (pp. 239-246). Canadian Human-Computer Communications Society.

[3] Ho, E.S., Komura, T. and Tai, C.L., 2010, July. Spatial relationship preserving character motion adaptation. In ACM Transactions on Graphics (TOG) (Vol. 29, No. 4, p. 33). ACM.

Naghizadeh M., Cosker D. (2019) Multi-character Motion Retargeting for Large-Scale Transformations. In: Gavrilova M., Chang J., Thalmann N., Hitzer E., Ishikawa H. (eds) Advances in Computer Graphics. CGI 2019. Lecture Notes in Computer Science, vol 11542. Springer, Cham